mjorud


Hi folks,

I used to be a gamer until my mid-twenties. Work and family, life as you might call it, "took over". A year or so ago Ron Gilbert released Return to Monkey Island, and man... it took me back to the good old times with adventure games: LucasArts, Sierra, Larry, Roger Wilco, Sam'n'Max and so forth. Memory lane...

Anyway. Return to Monkey Island woke up the dormant gamer in me. Since then I have played Fallen Order, Survivor and The Last of Us, and I'm currently working my way through Alan Wake 2 (scary or what?)... and I'm really looking forward to Outlaws! Not sure why I'm sharing my life story... anyway... to make a long story a bit longer.

My current setup is:

- Intel i7-12700K
- 64 GB DDR4
- RTX 4070 Super
- Samsung 990 Pro 2 TB (NVMe, passed through)

I have pinned 5 P-cores and assigned 32 GB RAM to the gaming VM (W11). Pinning 6 cores does not give me any notable performance gain in-game. To play I'm using Sunshine and Moonlight on an Apple TV with an Xbox 360 controller. It works great, and would work even better if Nvidia fixed the HAGS issue.

With RTSS running I see that GPU usage sits at ~70% in most games. Will upgrading the CPU to an i9-14900K (or even an i7-14700K) increase performance? An increase in 1% low FPS would be great. From my limited knowledge about bottlenecking it seems that the CPU is "to blame".

I still have the same showstoppers (and a dog) that put the gamer in me to sleep, so when I actually have the time to disembowel some Imperial bastards, find the secret of Monkey Island or shit my pants reading Alan's manuscripts, I want to do it with all graphical settings set to HIGH.

To summarise... will upgrading from the i7-12700K to the i9-14900K give me a boost in 1% low FPS?

Best regards...
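For anyone wanting to compare 1% low FPS before and after a CPU change, here is a rough sketch of one common way to compute it from a list of frame times. The function names are made up for illustration, and monitoring tools differ in how they define "1% low" (some use the 99th-percentile frame time instead of an average), so treat the numbers as comparable only within the same method.

```python
def one_percent_low_fps(frame_times_ms):
    """FPS equivalent of the mean frame time over the slowest 1% of frames.

    This is one common definition; other tools may use a percentile instead.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    # Always keep at least one frame so short captures still give a value.
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    mean_ms = sum(worst) / len(worst)
    return 1000.0 / mean_ms


def average_fps(frame_times_ms):
    """Average FPS over the whole capture."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)


if __name__ == "__main__":
    # Hypothetical capture: 99 smooth frames at 10 ms and one 50 ms stutter.
    capture = [10.0] * 99 + [50.0]
    print(f"average: {average_fps(capture):.1f} FPS")
    print(f"1% low:  {one_percent_low_fps(capture):.1f} FPS")
```

A single stutter barely moves the average but dominates the 1% low, which is why the 1% low figure is the more telling one when a CPU limit is suspected.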

-

VM GPU passthrough resizable BAR support in 6.1 kernel

mjorud replied to Ancalagon's topic in Feature Requests

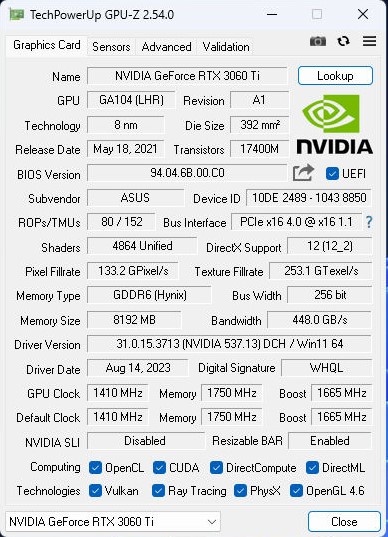

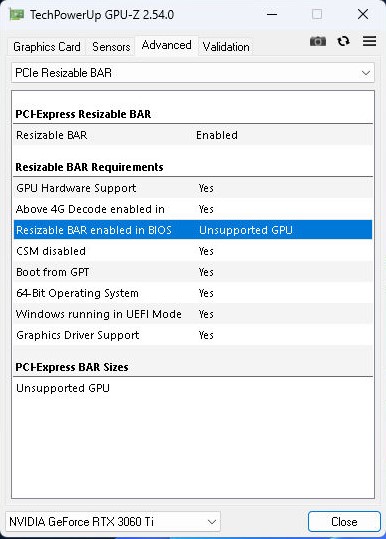

I have seen the same thing. GPU-Z shows that Resizable BAR is enabled, even though "Resizable BAR enabled in BIOS" shows Unsupported GPU. The Nvidia control panel shows that Resizable BAR is enabled, with 8192 MB GDDR6 dedicated video memory and 24572 MB total available graphics memory. Does ReBAR work? Not sure, and I'm not sure how to confirm it in-game.

VM GPU passthrough resizable BAR support in 6.1 kernel

mjorud replied to Ancalagon's topic in Feature Requests

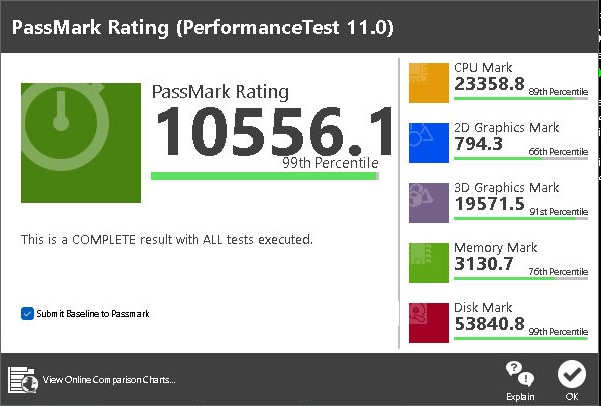

I updated from 6.12.3 to 6.12.4-rc19 today and now I have the resource1_resize file. I ran the script, but I still have "Unsupported GPU" in GPU-Z. A bonus is that I have seen a 13% increase for both 2D and 3D when running PassMark PerformanceTest after the update.
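As a side note on what resource1_resize contains: according to the kernel's sysfs PCI documentation, reading it returns a hexadecimal bitmap in which bit 0 means 1 MB, bit 1 means 2 MB, and so on, and writing a bit position requests that size. A small sketch for decoding it is below; the helper names are hypothetical, and the device path is simply the one used in this thread.

```python
from pathlib import Path

# Path taken from this thread; adjust the PCI address for your own GPU.
RESIZE_FILE = Path("/sys/bus/pci/devices/0000:01:00.0/resource1_resize")


def supported_bar_sizes_mb(bitmap_hex):
    """Decode the resourceN_resize bitmap into a list of sizes in MB."""
    bitmap = int(bitmap_hex.strip(), 16)
    return [1 << bit for bit in range(bitmap.bit_length()) if (bitmap >> bit) & 1]


def bit_for_size_mb(size_mb):
    """Bit position to write to resourceN_resize for a given size in MB."""
    if size_mb <= 0 or size_mb & (size_mb - 1):
        raise ValueError("size must be a positive power of two (in MB)")
    return size_mb.bit_length() - 1


if __name__ == "__main__":
    if RESIZE_FILE.exists():
        print(supported_bar_sizes_mb(RESIZE_FILE.read_text()))
    else:
        print(f"{RESIZE_FILE} not found (kernel too old or BAR not resizable?)")
```

For an 8 GB BAR the value to write would be `bit_for_size_mb(8192)`, and the device normally has to be unbound from its driver before resizing.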

VM GPU passthrough resizable BAR support in 6.1 kernel

mjorud replied to Ancalagon's topic in Feature Requests

I'm trying the patch, but for some reason I'm missing "resource1_resize" under "/sys/bus/pci/devices/0000\:01\:00.0/". "cat resource1_resize" only gives me "cat: resource1_resize: No such file or directory". I assume that my problem lies there?

But if I run "lspci -vvvs 01:00.0 | grep "BAR"" I get:

    Capabilities: [bb0 v1] Physical Resizable BAR
    BAR 0: current size: 16MB, supported: 16MB
    BAR 1: current size: 8GB, supported: 64MB 128MB 256MB 512MB 1GB 2GB 4GB 8GB
    BAR 3: current size: 32MB, supported: 32MB

So by the look of that, everything looks OK.
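To check the lspci output without eyeballing it, a minimal parsing sketch could look like this. The sample text is the output quoted in this post; the function names are invented for illustration, and the check assumes lspci lists the supported sizes in ascending order.

```python
import re

# lspci output as quoted in this post.
SAMPLE_LSPCI = """\
Capabilities: [bb0 v1] Physical Resizable BAR
    BAR 0: current size: 16MB, supported: 16MB
    BAR 1: current size: 8GB, supported: 64MB 128MB 256MB 512MB 1GB 2GB 4GB 8GB
    BAR 3: current size: 32MB, supported: 32MB
"""

BAR_LINE = re.compile(r"BAR (\d+): current size: ([^,\s]+), supported: (.+)")


def parse_rebar(text):
    """Return {bar_index: (current_size, [supported_sizes])}."""
    bars = {}
    for match in BAR_LINE.finditer(text):
        bars[int(match.group(1))] = (match.group(2), match.group(3).split())
    return bars


def is_at_largest_size(bars, index):
    """True if the BAR is already set to the last (assumed largest) size."""
    current, supported = bars[index]
    return current == supported[-1]


if __name__ == "__main__":
    bars = parse_rebar(SAMPLE_LSPCI)
    for index, (current, supported) in sorted(bars.items()):
        print(index, current, supported, is_at_largest_size(bars, index))
```

This only confirms what the host sees for the device; whether the guest driver actually uses the large BAR is a separate question.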

VM GPU passthrough resizable BAR support in 6.1 kernel

mjorud replied to Ancalagon's topic in Feature Requests

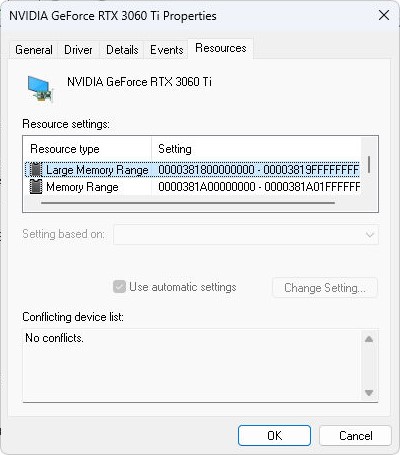

Hi,

I have been tinkering with ReBAR and general performance in a W11 gaming VM. Hardware is an i7-12700K, Asus PRIME Z690M-PLUS D4 and Asus RTX 3060 Ti Dual Mini v2. The GPU does support ReBAR. The VM has 12 CPUs and 32 GB RAM allocated. A Samsung 990 Pro is passed through as the only disk.

The VM is working fine. Not great, but fine. I say fine because it's a huge step down from bare metal in terms of benchmarks. A little overhead is expected though.

I have followed this and other threads and I think I have done everything correctly. GPU-Z shows that ReBAR is enabled... but that the GPU is unsupported. The VM is installed on an NVMe drive, so I can also boot it bare metal, and then all is good; the GPU is supported. Looking in the Device Manager, Large Memory Range is there. I have to admit that I have not yet tried the user script...

These are my results from PerformanceTest.

Best regards
J

mjorud started following [6.12.0-rc4.1]winbindd and network loss

-

Not sure if this is related to the RC, but as I'm running the RC... I was watching a movie using the Plex Docker and all of a sudden the movie stopped. Neither the Plex container nor the Unraid web GUI was accessible. This has happened once before, but I did not investigate it.

Excerpt from the syslog:

    May 1 20:41:33 nansen emhttpd: read SMART /dev/sdf
    May 1 20:45:28 nansen winbindd[6714]: [2023/05/01 20:45:28.136962, 0] ../../source3/winbindd/winbindd.c:821(winbind_client_processed)
    May 1 20:45:28 nansen winbindd[6714]: winbind_client_processed: request took 128.989669 seconds
    May 1 20:45:28 nansen winbindd[6714]: [struct process_request_state] ../../source3/winbindd/winbindd.c:437 [2023/05/01 20:43:19.070099] ../../source3/winbindd/winbindd.c:618 [2023/05/01 20:45:28.059768] [128.989669] -> TEVENT_REQ_DONE (2 0))
    May 1 20:45:28 nansen winbindd[6714]: [struct resp_write_state] ../../nsswitch/wb_reqtrans.c:307 [2023/05/01 20:45:27.496398] ../../nsswitch/wb_reqtrans.c:344 [2023/05/01 20:45:28.059766] [0.563368] -> TEVENT_REQ_DONE (2 0))
    May 1 20:45:28 nansen winbindd[6714]: [struct writev_state] ../../lib/async_req/async_sock.c:267 [2023/05/01 20:45:27.496399] ../../lib/async_req/async_sock.c:373 [2023/05/01 20:45:27.509262] [0.012863] -> TEVENT_REQ_DONE (2 0))
    May 1 21:15:33 nansen emhttpd: spinning down /dev/sdh
    May 1 21:15:33 nansen emhttpd: spinning down /dev/sdd

I think it took approx. 2 minutes before the server came online again, which matches the ~129 second winbindd request in the log. I tried to google a bit but came up short. Any idea what's happening?

Thank you in advance.

nansen-diagnostics-20230501-2141.zip
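To see whether earlier freezes line up with slow winbindd requests, a small sketch like the following could scan the syslog for "request took N seconds" entries. The function name and the 60 second threshold are arbitrary choices for illustration, and the regular expression is based only on the line format shown in the excerpt above.

```python
import re

SLOW_REQUEST = re.compile(
    r"^(\w{3}\s+\d+\s+[\d:]+)\s+\S+\s+winbindd\[\d+\]:\s+"
    r"winbind_client_processed: request took ([\d.]+) seconds"
)


def slow_winbind_requests(lines, threshold_s=60.0):
    """Return (timestamp, seconds) for winbindd requests at or above the threshold."""
    hits = []
    for line in lines:
        match = SLOW_REQUEST.match(line.strip())
        if match and float(match.group(2)) >= threshold_s:
            hits.append((match.group(1), float(match.group(2))))
    return hits


if __name__ == "__main__":
    # Two lines copied from the excerpt in this post.
    excerpt = [
        "May 1 20:41:33 nansen emhttpd: read SMART /dev/sdf",
        "May 1 20:45:28 nansen winbindd[6714]: winbind_client_processed: "
        "request took 128.989669 seconds",
    ]
    for timestamp, seconds in slow_winbind_requests(excerpt):
        print(f"{timestamp}: {seconds:.1f} s")
```

To run it over a whole log, pass an open file object instead of the list, since iterating a file yields its lines.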

-

It works as expected and no longer backs up volume mappings other than \appdata\. The old version of the backup plugin required the CA Auto Update Applications plugin to be installed (if I remember correctly). Does this new version also require that plugin?

-

Does Appdata Backup also back up all Docker volume mappings? My appdata\binhex-delugevpn is 73 MB, but I had to stop the backup process when the backed-up archive grew past 200 GB. This also happened with several other Dockers. Please find the debug log attached.

ab.debug.log

-

    WARNING: some attributes cannot be read from corsair-cpro kernel driver
    WARNING: some attributes cannot be read from corsair-cpro kernel driver
    Corsair Commander Pro
    ├── Temperature probe 1 No
    ├── Temperature probe 2 No
    ├── Temperature probe 3 No
    ├── Temperature probe 4 No
    ├── Fan 1 control mode PWM
    ├── Fan 2 control mode PWM
    ├── Fan 3 control mode DC
    ├── Fan 4 control mode PWM
    ├── Fan 5 control mode DC
    └── Fan 6 control mode DC
    ASUS Aura LED Controller (experimental)
    └── Firmware version AULA3-AR32-0207
    Corsair Commander Pro
    ├── Temperature probe 1 No
    ├── Temperature probe 2 No
    ├── Temperature probe 3 No
    ├── Temperature probe 4 No
    ├── Fan 1 control mode PWM
    ├── Fan 2 control mode PWM
    ├── Fan 3 control mode DC
    ├── Fan 4 control mode PWM
    ├── Fan 5 control mode DC
    └── Fan 6 control mode DC
    ASUS Aura LED Controller (experimental)
    └── Firmware version AULA3-AR32-0207

This is what the log file outputs now. Please note that the ASUS Aura LED Controller is popping up because I have just upgraded the motherboard.

I just found out (and I have been using Unraid for 10 years or so) that Unraid has native support for the Corsair Commander Pro. At least all fans are visible in the Dashboard.

-

The Docker is up to date, and my config.yaml only contains: controller: type: 'commander' fan_sync_speed: '100'. I do not have the Kraken.

-

This is the config.yaml: controller: type: 'commander' fan_sync_speed: '100'

And after the latest update this is what the log says:

    WARNING: some attributes cannot be read from corsair-cpro kernel driver
    Usage:
      liquidctl [options] list
      liquidctl [options] initialize [all]
      liquidctl [options] status
      liquidctl [options] set <channel> speed (<temperature> <percentage>) ...
      liquidctl [options] set <channel> speed <percentage>
      liquidctl [options] set <channel> color <mode> [<color>] ...
      liquidctl [options] set <channel> screen <mode> [<value>]
      liquidctl --help
      liquidctl --version
    Usage:
      liquidctl [options] list
      liquidctl [options] initialize [all]
      liquidctl [options] status
      liquidctl [options] set <channel> speed (<temperature> <percentage>) ...
      liquidctl [options] set <channel> speed <percentage>
      liquidctl [options] set <channel> color <mode> [<color>] ...
      liquidctl [options] set <channel> screen <mode> [<value>]
      liquidctl --help
      liquidctl --version
    Corsair Commander Pro
    ├── Temperature probe 1 No
    ├── Temperature probe 2 No
    ├── Temperature probe 3 No
    ├── Temperature probe 4 No
    ├── Fan 1 control mode PWM
    ├── Fan 2 control mode PWM
    ├── Fan 3 control mode DC
    ├── Fan 4 control mode PWM
    ├── Fan 5 control mode DC
    └── Fan 6 control mode DC

If I open the console and run liquidctl status:

    # liquidctl status
    Corsair Commander Pro
    ├── Fan 1 speed 1369 rpm
    ├── Fan 2 speed 1380 rpm
    ├── Fan 3 speed 1197 rpm
    ├── Fan 4 speed 1351 rpm
    ├── Fan 5 speed 1224 rpm
    ├── Fan 6 speed 1211 rpm
    ├── +12V rail 12.01 V
    ├── +5V rail 4.97 V
    └── +3.3V rail 3.36 V
    #

it seems to work just fine though.
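If it helps with comparing the container log against the console, here is a rough sketch that turns the tree-style `liquidctl status` text into a dictionary. It is written only against the output format shown in this post, the function name is made up, and it does not call liquidctl itself.

```python
import re

# Status output as shown in this post.
SAMPLE_STATUS = """\
Corsair Commander Pro
├── Fan 1 speed 1369 rpm
├── Fan 2 speed 1380 rpm
├── Fan 3 speed 1197 rpm
├── Fan 4 speed 1351 rpm
├── Fan 5 speed 1224 rpm
├── Fan 6 speed 1211 rpm
├── +12V rail 12.01 V
├── +5V rail 4.97 V
└── +3.3V rail 3.36 V
"""

# Tree branch, label, numeric value, unit.
STATUS_LINE = re.compile(r"^[├└]── (.+?)\s+([\d.]+)\s+(\S+)\s*$")


def parse_status(text):
    """Return {label: (value, unit)} for every numeric status line."""
    readings = {}
    for line in text.splitlines():
        match = STATUS_LINE.match(line)
        if match:
            readings[match.group(1)] = (float(match.group(2)), match.group(3))
    return readings


if __name__ == "__main__":
    for label, (value, unit) in parse_status(SAMPLE_STATUS).items():
        print(f"{label}: {value} {unit}")
```

Lines without a numeric value, such as the "Fan 1 control mode PWM" entries from the initialization output, are simply skipped.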

-

That was quick.

This is my config.yaml: controller: type: 'commander' fan_speed: '100'

And this is what the log file shows:

    WARNING: some attributes cannot be read from corsair-cpro kernel driver
    Usage:
      liquidctl [options] list
      liquidctl [options] initialize [all]
      liquidctl [options] status
      liquidctl [options] set <channel> speed (<temperature> <percentage>) ...
      liquidctl [options] set <channel> speed <percentage>
      liquidctl [options] set <channel> color <mode> [<color>] ...
      liquidctl [options] set <channel> screen <mode> [<value>]
      liquidctl --help
      liquidctl --version
    Usage:
      liquidctl [options] list
      liquidctl [options] initialize [all]
      liquidctl [options] status
      liquidctl [options] set <channel> speed (<temperature> <percentage>) ...
      liquidctl [options] set <channel> speed <percentage>
      liquidctl [options] set <channel> color <mode> [<color>] ...
      liquidctl [options] set <channel> screen <mode> [<value>]
      liquidctl --help
      liquidctl --version
    Corsair Commander Pro
    ├── Temperature probe 1 No
    ├── Temperature probe 2 No
    ├── Temperature probe 3 No
    ├── Temperature probe 4 No
    ├── Fan 1 control mode PWM
    ├── Fan 2 control mode PWM
    ├── Fan 3 control mode DC
    ├── Fan 4 control mode PWM
    ├── Fan 5 control mode DC
    └── Fan 6 control mode DC

I would assume the log should show something like this:

    Corsair Commander Pro
    ├── Fan 1 speed 1357 rpm
    ├── Fan 2 speed 1372 rpm
    ├── Fan 3 speed 1193 rpm
    ├── Fan 4 speed 1325 rpm
    ├── Fan 5 speed 1227 rpm
    ├── Fan 6 speed 1210 rpm
    ├── +12V rail 12.01 V
    ├── +5V rail 4.97 V
    └── +3.3V rail 3.36 V

Best regards

-

mjorud started following mplogas - Support Thread for container(s)

-

I have been looking for a liquidctl Docker that supports the Corsair Commander Pro.

The LaaC folder created under \appdata is owned by root:root instead of nobody:users. This makes it hard to create config.yaml using the share. Adding PUID 99 and PGID 100 helped.

I can't wrap my head around the YAML file. All I want is to run liquidctl set sync speed 100 to make all six fans run at maximum. The server is located in the outhouse, so noise is of no concern.

Thank you for the Docker.
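For reference, this is roughly what I am after, sketched as a small script instead of a YAML file. It only wraps the two liquidctl commands mentioned in this thread (`initialize all` and `set sync speed 100`); the function names and the dry-run default are my own illustration and have nothing to do with how the container is actually implemented.

```python
import subprocess


def build_fan_commands(percent, channel="sync"):
    """Build the liquidctl command lines for a fixed fan duty."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return [
        ["liquidctl", "initialize", "all"],
        ["liquidctl", "set", channel, "speed", str(percent)],
    ]


def run_commands(commands, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    shown = []
    for command in commands:
        shown.append(" ".join(command))
        print(shown[-1])
        if not dry_run:
            # check=True raises CalledProcessError if liquidctl fails.
            subprocess.run(command, check=True)
    return shown


if __name__ == "__main__":
    run_commands(build_fan_commands(100))
```

With `dry_run=False` this needs liquidctl on the PATH and access to the USB device, so it would have to run inside the container or on the host.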

-

I did some more testing. Assigning all CPU cores/threads to the VM, I get a PassMark CPU score of 10200. That's a bump from 7300. The 3DMark average score goes from 7900 to 8800, and the CPU score from 3460 to 4820. No change in GPU score.

Not sure what kind of impact this has on the host. I assume that the host needs some horsepower to run VM services and other services...
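Put as percentages, the scores above work out as in this quick calculation. The labels are just shorthand for the three score pairs quoted in this post.

```python
def percent_change(before, after):
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


# Score pairs (8 threads, all threads) as quoted in this post.
SCORES = {
    "PassMark CPU": (7300, 10200),
    "3DMark average": (7900, 8800),
    "3DMark CPU": (3460, 4820),
}

if __name__ == "__main__":
    for name, (before, after) in SCORES.items():
        print(f"{name}: {before} -> {after} ({percent_change(before, after):+.1f}%)")
```

That is roughly +39.7% for the PassMark CPU score, +11.4% for the 3DMark average and +39.3% for the 3DMark CPU score.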

-

Hi ghost82,

True, the bare metal benchmarks I compare my results with use the full 12 threads while I only use 8. Because of this I do expect reduced results, but not as low as I get.

I ran LatencyMon and my "system appears to be suitable for handling real-time audio and other tasks without dropouts".

I also ran WhySoSlow and all seems good except for "The highest measured SM BIOS interrupt or other stall was 156 microseconds. This is considered poor behaviour". Not sure if this really is a problem.