Sparkum


Alright, please take everything I have here as a guideline, because I may have set it all up wrong or not in an "optimized" way, but hey, it works! I also did this over a period of time, so I probably did some things in stupid ways. I'm going to assume you already have your drives mounted with rclone. I did not encrypt; it's essentially the same, just point to your encrypted folders.

Steps:

- Create a Google API key (or whatever it's called; google that).
- Create a folder in appdata called "plexdrive".
- Create a folder in there called "tmp".
- Copy plexdrive from starbix's plugin into the plexdrive folder and call it "plexdrive". (You could also just install it from the plexdrive GitHub; this was one of those things I didn't know when I started.)
- Make it executable:

    chmod 755 /mnt/user/appdata/plexdrive/plexdrive

- Create a file in your plexdrive folder called "config.json" and put your client ID and secret from the Google API in it:

    {
      "clientId": "ID goes here",
      "clientSecret": "Client Secret goes here"
    }

So you should now have a folder /mnt/user/appdata/plexdrive, and inside it should be the file plexdrive, the file config.json, and the folder tmp.

Then here are my mounting scripts. First plexdrive:

    /mnt/user/appdata/plexdrive/plexdrive -o allow_other -c /mnt/user/appdata/plexdrive -t /mnt/user/appdata/plexdrive/tmp /mnt/disks/plxdrive/ &

That should get you a working plexdrive; mine mounts to /mnt/disks/plxdrive.

And here is my rclone mount for my Google Drive, so I can access it outside of plexdrive's read-only mount:

    rclone mount --allow-other --allow-non-empty plexdrive:/ /mnt/disks/plexdrive/ &

So at this point you should have a fully working plexdrive (read only, at /mnt/disks/plxdrive) and a read/write Google Drive (at /mnt/disks/plexdrive).

Add these to your SMB config to easily browse your folders:

    [plexdrive]
    path = /mnt/disks/plxdrive
    comment =
    browseable = yes
    # Public
    public = yes
    writeable = yes
    vfs objects =

    [plexdriveRW]
    path = /mnt/disks/plexdrive
    comment =
    browseable = yes
    # Public
    public = yes
    writeable = yes
    vfs objects =
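The folder setup from the steps above could be scripted roughly like this. It is only a sketch: `setup_plexdrive_dir` is a made-up helper name (not part of plexdrive or the plugin), and it assumes you pass in your own appdata path, client ID, and client secret.

```shell
#!/bin/sh
# Sketch only: lays out the plexdrive folder described in the post.
# setup_plexdrive_dir is a hypothetical helper name, not part of plexdrive.
setup_plexdrive_dir() {
    local appdata="$1"        # e.g. /mnt/user/appdata
    local client_id="$2"      # client ID from the Google API console
    local client_secret="$3"  # client secret from the Google API console
    local dir="$appdata/plexdrive"

    # Create the plexdrive folder and its tmp subfolder.
    mkdir -p "$dir/tmp" || return 1

    # Write config.json with the two keys used in the post.
    cat > "$dir/config.json" <<EOF
{
  "clientId": "$client_id",
  "clientSecret": "$client_secret"
}
EOF

    # Make the binary executable if it has already been copied in.
    if [ -f "$dir/plexdrive" ]; then
        chmod 755 "$dir/plexdrive"
    fi
}

# Example call (commented out so nothing runs on paste):
# setup_plexdrive_dir /mnt/user/appdata "ID goes here" "Client Secret goes here"
```

Copying the plexdrive binary in is still a manual step; the function only fixes its permissions if the file is already there.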
And then (if you wish) you're going to want to make a union mount so that Plex will see local and cloud data as the same.

So for me, I made a folder:

    /mnt/user/Media/Plex/TV

(this folder was empty when I started). I created a new folder in disks:

    /mnt/disks/plexdriveunion/

And then I merged those two and my plxdrive all together. I installed starbix's union plugin, but I also went to the NerdPack plugin and installed fuse that way.

    unionfs -o cow,allow_other /mnt/user/Media/Plex/=RW:/mnt/disks/plxdrive/Media/Plex/=RO /mnt/disks/plexdriveunion/ &

So now your /mnt/disks/plexdriveunion folder will have some data in it.

Add to your SMB config:

    [plexdriveunion]
    path = /mnt/disks/plexdriveunion
    comment =
    browseable = yes
    # Public
    public = yes
    writeable = yes
    vfs objects =

Your /mnt/user/Media/Plex/TV folder will now have a hidden folder in it.

Point everything (Plex, Sonarr, etc.) to /mnt/disks/plexdriveunion and off you go. They will write to your local copy, and then you simply rclone it to the cloud. I'm personally using:

    rclone move --transfers 10 --exclude .unionfs/** --min-age 30d /mnt/user/Media/Plex/TV/Adult plexdrive:/Media/Plex/TV/Adult

Sorry this jumps all over the place, but hopefully it points you in the right direction. Feel free to ask follow-up questions.
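If you move more than one folder, the rclone move command above can be wrapped in a small function. This is a sketch under assumptions: `upload_old_files` and `RCLONE_BIN` are names invented here, and the local and remote roots are simply the paths used in the post. Anything passed after the folder name is handed to rclone as-is, so you can add rclone's `--dry-run` flag to see what would be moved before moving it.

```shell
#!/bin/sh
# Sketch only: wraps the rclone move command from the post.
# upload_old_files and RCLONE_BIN are hypothetical names used for illustration.
RCLONE_BIN="${RCLONE_BIN:-rclone}"

upload_old_files() {
    local subdir="$1"                          # e.g. TV/Adult
    local local_root="/mnt/user/Media/Plex"    # local (read/write) side of the union
    local remote_root="plexdrive:/Media/Plex"  # rclone remote named in the post
    shift

    # Same flags as in the post. The exclude pattern is quoted so the shell
    # passes it to rclone unchanged. Remaining arguments (for example
    # --dry-run) are appended as given.
    "$RCLONE_BIN" move --transfers 10 --exclude '.unionfs/**' --min-age 30d \
        "$@" "$local_root/$subdir" "$remote_root/$subdir"
}

# Example (commented out so nothing runs on paste):
# upload_old_files TV/Adult --dry-run
```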

-

The plugin places the file "plexdrive" in the /usr/sbin/ folder, so you would just run your command pointing at that. I just spent like a week hitting my head against a wall, so let me know if you want more details on how I got plexdrive working, A to Z.

-

Thanks for your plugins, I got plexdrive all up and running!

-

Hey Starbix. I would love your unionfs plugin as well. Figuring it out is another thing but I would appreciate it for sure. And thanks for your first plugin!

-


Hey all. A plugin for plexdrive would be awesome, I think. It's a twist on rclone that helps to avoid the API bans.

-

Well, I went at it again tonight. The first drive to fail is completely dead. Essentially I keep getting the message that there is a hardware problem. Alright, the drive died as expected; it was old. The second drive I was able to get going once I started using sdm1. I got it mounted, however it's only showing 1GB of data. It is what it is: 99% of what was on that disk wasn't something to worry about, the 0.5% that truly mattered I have in multiple places, and the remaining 0.5% just kinda sucks. I honestly don't know if I can 100% say anything bad about Unraid. All I know is that days after it all happened, files were not there, from multiple shares, so that was my logical assumption. I know everyone said that's impossible, but in my mind it all makes sense. This is not my first drive failure (or kick-out, rather), and every time Unraid has worked perfectly. Thanks all for your help! I at least got the answers I wanted.

-

Alright, thanks guys. Sorry, yeah, I definitely skimmed the page; I'll read it in full before trying again tonight. And thanks again for mentioning the partition thing. I guess I assumed (my bad for not reading) that I was simply doing it on the disk, not the partition. Hopefully I didn't make my problem worse, but at least I can say it's because of me and not Unraid's product.
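For anyone else who trips over the disk-versus-partition thing, here is a rough guard you could put in front of a filesystem check. It is a sketch only: `is_whole_disk_name` and `check_target` are made-up helpers, they look at nothing but the device name, and they only understand /dev/sdX style names.

```shell
#!/bin/sh
# Sketch only: name-based guard so a filesystem check is aimed at a
# partition (/dev/sdm1) rather than the whole disk (/dev/sdm).
# is_whole_disk_name and check_target are hypothetical helpers.
is_whole_disk_name() {
    case "$1" in
        /dev/sd*[0-9]) return 1 ;;   # ends in a digit: a partition such as /dev/sdm1
        /dev/sd*)      return 0 ;;   # no trailing digit: the whole disk
        *)             return 1 ;;   # anything else is not judged here
    esac
}

check_target() {
    if is_whole_disk_name "$1"; then
        echo "$1 is a whole disk; use its partition instead (for example ${1}1)"
        return 1
    fi
    echo "ok: $1"
}

# Example (commented out so nothing runs on paste):
# check_target /dev/sdm1 && reiserfsck --check /dev/sdm1
```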

-

Gah =/ Thanks! I'll try again tonight!

-

Also just went through the same process with the second disk and received...

    root@Tower:/mnt/disks# reiserfsck --fix-fixable /dev/sdm
    reiserfsck 3.6.24

    Will check consistency of the filesystem on /dev/sdm
    and will fix what can be fixed without --rebuild-tree
    Will put log info to 'stdout'

    Do you want to run this program?[N/Yes] (note need to type Yes if you do):Yes
    ###########
    reiserfsck --fix-fixable started at Sun Mar 12 22:58:43 2017
    ###########
    Replaying journal:
    The problem has occurred looks like a hardware problem. If you have
    bad blocks, we advise you to get a new hard drive, because once you
    get one bad block that the disk drive internals cannot hide from
    your sight,the chances of getting more are generally said to become
    much higher (precise statistics are unknown to us), and this disk
    drive is probably not expensive enough for you to you to risk your
    time and data on it. If you don't want to follow that follow that
    advice then if you have just a few bad blocks, try writing to the
    bad blocks and see if the drive remaps the bad blocks (that means
    it takes a block it has in reserve and allocates it for use for
    of that block number). If it cannot remap the block, use badblock
    option (-B) with reiserfs utils to handle this block correctly.

So that disk is pretty black and white.

-

Thanks for this. So initially I ran

    xfs_repair -nv /dev/sdm

to which I was told

    Phase 1 - find and verify superblock...
    bad primary superblock - bad magic number !!!

    attempting to find secondary superblock...
    ............................................................Sorry, could not find valid secondary superblock
    Exiting now.

followed by

    reiserfsck --rebuild-sb /dev/sdm

I went through all the questions the first time (didn't copy and paste that), but the following times that I run it, I am now greeted with

    reiserfsck 3.6.24

    Will check superblock and rebuild it if needed
    Will put log info to 'stdout'

    Do you want to run this program?[N/Yes] (note need to type Yes if you do):Yes
    Reiserfs super block in block 16 on 0x8c0 of format 3.6 with standard journal
    Count of blocks on the device: 488378640
    Number of bitmaps: 14905
    Blocksize: 4096
    Free blocks (count of blocks - used [journal, bitmaps, data, reserved] blocks): 0
    Root block: 0
    Filesystem is NOT clean
    Tree height: 0
    Hash function used to sort names: not set
    Objectid map size 0, max 972
    Journal parameters:
        Device [0x0]
        Magic [0x0]
        Size 8193 blocks (including 1 for journal header) (first block 18)
        Max transaction length 1024 blocks
        Max batch size 900 blocks
        Max commit age 30
    Blocks reserved by journal: 0
    Fs state field: 0x1:
        some corruptions exist.
    sb_version: 2
    inode generation number: 0
    UUID: c462cb69-dfa8-4482-8861-bf2588d00976
    LABEL:
    Set flags in SB:
    Mount count: 1
    Maximum mount count: 30
    Last fsck run: Sun Mar 12 22:33:51 2017
    Check interval in days: 180

    Super block seems to be correct

At this point I am kinda assuming I'm SOL?

-

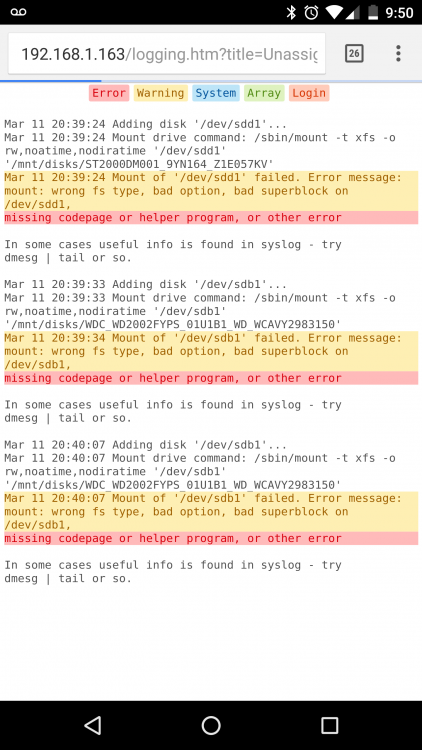

The disks in question are removed; the parity is rebuilt with replacement drives and working perfectly. The disks have been placed back into my computer AND another computer and added with the unassigned disks plugin, but I have been unable to mount them. As I type this though, with what you said, there is something additional I can do when I start the array in maintenance mode, isn't there? A way to skip corruption or something like that?

-

No, sorry, I knew every second of the way. I just didn't know for a couple of days that there was lost data.

Drive kicked out, notified.
New drive in and rebuild started.
Woke up to a notification that the new drive was kicked out.
Put the next new drive in, rebuilt successfully.
Moved on with my life.
~5 days go by and people start asking where stuff is.
A month+ later, now trying to figure out what was on those drives (and planning a backup computer).

-

I can't imagine any logs I can provide are going to be of any use. This happened near the beginning of February, and was noticed probably about 5 days later when people started asking me where a bunch of stuff went: media missing, files from my wife's shares gone, etc. I started diagnosing near the end of February, kinda failed, and am giving it another shot now. Additionally, I turned my desktop into an Unraid machine last night (so new cords, no RAID card, motherboard connections only, etc.), installed unassigned disks, and got the same results with both disks. My array is fully rebuilt and has been for a month; it's just a matter of recovery now.

-

Sorry, to be more specific: what it did after the second drive died was emulate the ~180GB that the parity had recovered.