bhinkle50

Members

Posts: 48

bhinkle50's Achievements

-

New build using an ASUS Prime Z790-P board. I have everything configured, and I can consistently get it to boot off the USB if I go into the BIOS menu at startup and then exit without making any changes. On that restart it will boot off the USB. If I just reboot and don't go into the BIOS menu, it won't boot off the USB. I'm sure there's a setting I'm missing, but I haven't done a build in a while, so I'm not sure if I'm missing something obvious.

-

VM not starting after attempting to change GPU passthrough

bhinkle50 replied to bhinkle50's topic in VM Engine (KVM)

Tried switching to VNC and then back again for this VM. Here are the libvirt log entries. I'm kind of at a loss as to what to do to recover.

2023-05-25 15:10:49.636+0000: 13707: error : qemuDomainAgentAvailable:8610 : Guest agent is not responding: QEMU guest agent is not connected
2023-05-25 15:11:05.861+0000: 13702: error : virNetSocketReadWire:1791 : End of file while reading data: Input/output error
2023-05-25 15:12:23.758+0000: 22579: error : qemuMonitorIORead:440 : Unable to read from monitor: Connection reset by peer
2023-05-25 15:12:23.759+0000: 22579: error : qemuProcessReportLogError:2056 : internal error: qemu unexpectedly closed the monitor: 2023-05-25T15:12:23.730373Z qemu-system-x86_64: -device pcie-pci-bridge,id=pci.8,bus=pci.1,addr=0x0: Bus 'pci.1' not found
2023-05-25 15:14:29.607+0000: 13803: error : virPCIGetHeaderType:2897 : internal error: Unknown PCI header type '127' for device '0000:04:00.0'
2023-05-25 15:14:45.234+0000: 13707: error : qemuDomainAgentAvailable:8610 : Guest agent is not responding: QEMU guest agent is not connected

-
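When log entries get pasted as one run of text like this, it can help to split them back into individual entries and count which functions are reporting errors. The following is only a rough sketch: it assumes every entry starts with the `timestamp: pid: level : function:line :` prefix seen in the entries quoted in this post, and the names `split_entries` and `summarize` are made up for illustration, not part of libvirt.

```python
import re
from collections import Counter

# Start of a libvirt log entry, as it appears in the post, e.g.
# "2023-05-25 15:10:49.636+0000: 13707: error : qemuDomainAgentAvailable:8610 : ..."
# (Assumed format; the embedded QEMU timestamp uses "T" instead of a space,
# so it is not mistaken for the start of a new entry.)
ENTRY_RE = re.compile(
    r"(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3}\+\d{4}): "
    r"(?P<pid>\d+): (?P<level>\w+) : (?P<func>\w+):(?P<line>\d+) : "
)

# Three of the entries from the post, run together the way they were pasted.
SAMPLE = (
    "2023-05-25 15:10:49.636+0000: 13707: error : qemuDomainAgentAvailable:8610 : "
    "Guest agent is not responding: QEMU guest agent is not connected "
    "2023-05-25 15:12:23.759+0000: 22579: error : qemuProcessReportLogError:2056 : "
    "internal error: qemu unexpectedly closed the monitor: "
    "2023-05-25T15:12:23.730373Z qemu-system-x86_64: -device "
    "pcie-pci-bridge,id=pci.8,bus=pci.1,addr=0x0: Bus 'pci.1' not found "
    "2023-05-25 15:14:45.234+0000: 13707: error : qemuDomainAgentAvailable:8610 : "
    "Guest agent is not responding: QEMU guest agent is not connected"
)


def split_entries(text):
    """Split run-together log text into one dict per entry."""
    matches = list(ENTRY_RE.finditer(text))
    entries = []
    for i, m in enumerate(matches):
        # An entry's message runs until the next entry prefix (or end of text).
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        entries.append({
            "ts": m.group("ts"),
            "level": m.group("level"),
            "func": m.group("func"),
            "message": text[m.end():end].strip(),
        })
    return entries


def summarize(entries):
    """Count error-level entries per reporting function."""
    return Counter(e["func"] for e in entries if e["level"] == "error")


if __name__ == "__main__":
    for entry in split_entries(SAMPLE):
        print(entry["ts"], entry["func"], "->", entry["message"])
    print(summarize(split_entries(SAMPLE)))
```

Splitting this way makes it easier to see that the guest agent message repeats while the monitor and PCI errors each appear once.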

So this was a pretty weird one. It started in the morning with my server losing its network connection. I also experienced some other networking issues, which caused me to reboot the network, and at the same time I rebooted my server. The server began to hang at the boot steps above, so I believed the issue was all related to my server. Then I had to reboot my network again, and again, and again. Now I couldn't get my server to boot, and my router appeared to be going out.

I pulled every piece of hardware out of the server: 17 drives and 4 expansion cards. I then went one by one. It started booting!!!! With that success, I slowly added pieces back into the mix. I eventually got it to boot fully, then ran a parity check and backed up VMs, appdata, and flash. I then rebooted and it STOPPED again, but this time the flash didn't even load to get to the boot. I restarted again, and it got to a different part before it stopped. Then it dawned on me that my flash was obviously failing. So I swapped out the flash drive, it booted perfectly, I transferred the key, and it has been rock solid. Well, until I started messing with the VMs in prep for my migration and screwed it all up. But that's another thread that you can see here.

Oh, almost forgot the best part. The original networking issue? It was because ASUS sucks at devops. They released a security file to their routers that consumed all the memory and caused the routers to crash consistently. They suck and you can read about it here.

-

I am preparing to migrate to a new server and thought I could switch my VM's GPU to the other one that I have in my case. After making the switch, I remembered that the reason I had this other GPU in there is that it's a server board with no graphics, so it's the system video. The reason I was trying to make the switch is that I wanted to free up my NVIDIA 1060 card to run vdarr to convert my media library to H.265. So I installed the NVIDIA plugin, moved the 710 GPU to the VM, and then realized that I couldn't pass that one through. So I uninstalled the NVIDIA plugin and remapped the VM to the 1060, but it won't come up. I've turned VM and Docker off and on, and rebooted the server a few times. I am getting the following error in the libvirt log:

2023-05-25 02:30:48.049+0000: 13707: error : qemuDomainAgentAvailable:8610 : Guest agent is not responding: QEMU guest agent is not connected

Diagnostics attached. Any thoughts appreciated.

hydra-diagnostics-20230524-2138.zip

-

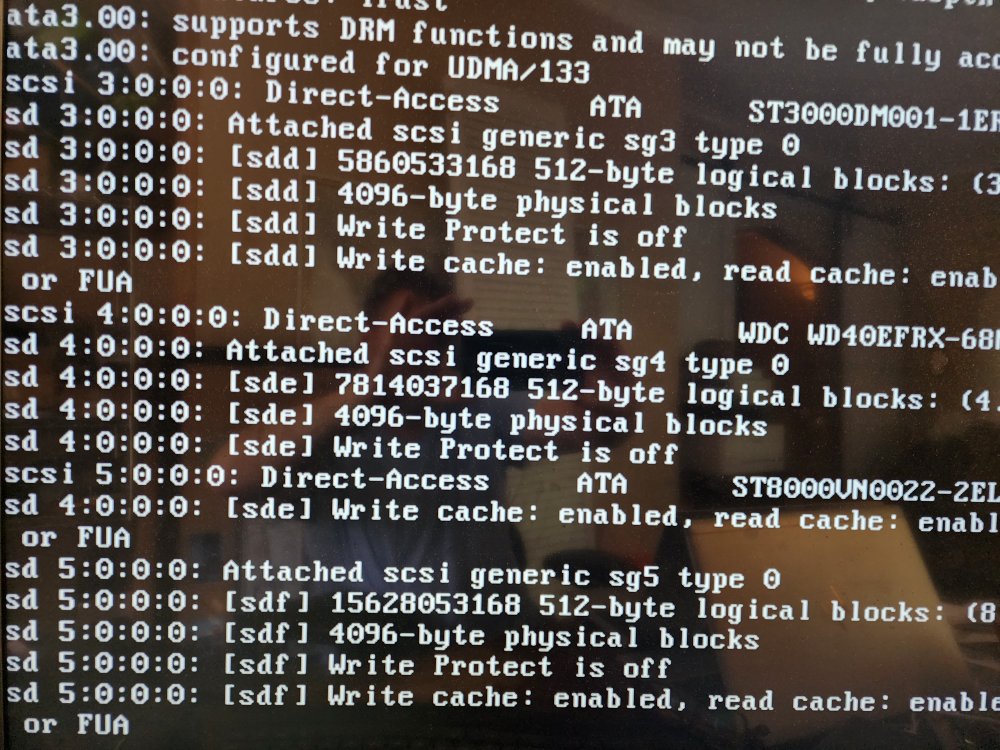

I woke up this morning to a VM that had no Ethernet. I rebooted the server to see if that would clear it up, and it had the same issue once it came back up. I went into network settings, which showed that one of my ports was down, and I had to shut down Docker and the VMs to edit the network settings and remove that port. I made a change to disable the port, but the server didn't appear to take the save and then hung. I did a hard power down and the server isn't coming back up. It appears to keep getting stuck on mounting the disks, but it isn't consistent which disk it stops on. I have pulled all cables and reseated them to make sure it's not a cable issue. I'm currently trying to reboot in safe mode, and it was stuck at the step in the photo for 15 minutes. I have since powered off and pulled the diagnostics from the last successful boot. Any thoughts are appreciated. Thanks.

hydra-diagnostics-20230517-1114.zip

-

Thanks. I knew it was something completely easy like that. I have been in directory cleanup mode and was ///// happy. All good now.

-

I did some cleanup on my array and upgraded my cache drives, but I can't get my VM manager to restart. For some reason I can't get it to create libvirt.img. I'm pausing here to ask for someone to look at my current config to see if I've deleted something that I need to bring back. I've restarted numerous times and can't seem to get the VM manager to come back. Any assistance is appreciated. TIA

hydra-diagnostics-20180729-1335.zip

-

Exactly what I'm using. I got a bit panicked when I first saw what was going on and couldn't find anything with my initial search so I made this post. I then found your post, and am following now. I'm at the step where my copy is done, and I'm getting ready to mount the cache pool again and copy back. Thanks for your help.

-

I did have 3, and it appears 2 of them got directory corruption. I mounted 1 of the drives in a degraded state and am in the process of copying the cache data over to the array, and then I plan to rebuild the cache pool. That seems to be the best course of action.

-

I believe I had a cable come loose on a cache drive, and I restarted the array without the drive twice. It is now presenting the missing cache pool drive to me as a new device. If I attempt to add it back to the cache pool, it wants to format the cache pool. How can I get my cache pool back?

hydra-diagnostics-20180713-1053.zip

-

I'm having the same issue on a rebuilt Win10 VM. I changed out the graphics card and ensured MSI+ and that had no impact. Really odd behavior. Definitely did not happen on any of my previous VMs. Hopefully someone can identify a potential solution. Edited to add that I built 2 more VMs and it is happening on all of them.

-

I upgraded my motherboard and dedicated GPU this week. Previously I was running fine with an AMD R7, but I wanted to switch to an NVIDIA GTX 1060 I picked up. The overall motherboard switch went well, but I can't get the passthrough to work with the new GPU. I followed @gridrunner's video, captured my ROM, and edited my XML to reflect it. I've also pci-stubbed the new card in my syslinux file. I've attached my XML and my server log. Any help would be appreciated.

unraid-diagnostics-20170908-1637.zip
win10 xml.txt

-

Thanks for the quick reply. I had re-downloaded the cert and OpenVPN file, but I guess I didn't get the OpenVPN file copied correctly. I just deleted them and redid it, and it's working now. Thanks again.

-

I'm getting the "no remote" error as well. I removed the container and reinstalled it, and the error is still present. Attached is my supervisord.log file. I don't see the script it's referencing in the /config directory, but I may not have followed the container paths correctly.

supervisord.log